Feature Update July 2024

It’s July again, and all around the northern hemisphere people’s thoughts are starting to drift away from work and toward summer vacation. But here at Catalogic, the great millwheel of software innovation never stops turning! It’s been less than a month since our last feature update, but we had some major new features in development that we wanted to get out to users as soon as they were ready… and before our customers headed off to the beach, to the mountains, or just to the back yard. We think you’ll be as excited about these new features as we are, so take a few minutes to see what’s new before you go back to planning that summer getaway!

Backup of PVs without snapshots

The headline new feature in this release is the ability to back up PVs that do not support snapshots. This comes along with options that give users control over which backup methods should be used. Previously, CloudCasa generally required that PVs to be backed up support the CSI snapshot interface, and would always create snapshots before backups for data consistency. There were select exceptions to this rule, such as NFS PVs which were backed up live, certain cloud PVs which supported non-CSI snapshot mechanisms, and Longhorn PVs which had an advanced option to force live backup.

Snapshots are great for assuring data consistency, and backing up from snapshots is still the preferred method. However, snapshot support in Kubernetes is complicated. It requires that the external snapshot controller component be installed, as well as the CSI snapshots CRDs. These are not installed by default in many distributions. Worse yet, many storage technologies do not support snapshots, and even when they do their CSI drivers do not always support the full snapshot API. Also, popular non-CSI PV types such as “local” cannot support snapshots. These are often user in smaller configurations such as edge environments.

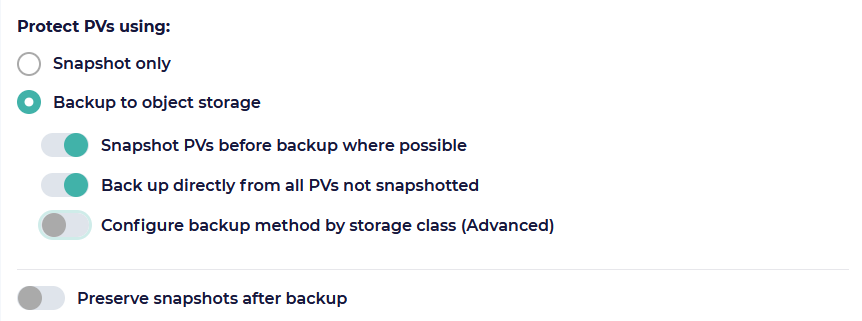

With this release, we have addressed the inconsistent availability of snapshots in customer environments by introducing significant changes to CloudCasa’s backup logic, but with only minor changes to backup definitions and the backup, migration, and replication wizards. Previously, when defining a backup job, you were prompted to select either “Snapshot only” or “Snapshot and copy” to protect PVs. You still have these choices, but now they are instead called “Snapshot only” and “Backup to object storage”. The first behaves the same as before, but if you select the second you are now given some additional options.

The first two are:

Snapshot PVs before backup where possible - Create snapshots of PVs that support them, and back up from the snapshots for consistency. This should generally be left enabled (the default) unless you experience problems with snapshot creation.

Back up directly from all PVs not snapshotted - All PVs not snapshotted will be backed up directly. This should generally be left enabled (the default) unless you do not wish to back up volumes which do not support snapshots.

Both of these options are enabled by default, and in most situations should be left enabled. With just the first enabled, CloudCasa’s behavior will be the same as before. With the second enabled, CloudCasa will also backup PVs which cannot be snapshotted by reading from the live volume.

An additional advanced option, Configure backup method by storage class allows you to manually select the backup method for each storage class defined in your cluster. The available methods are:

Read data from snapshot

Read data from PVC

Read data from underlying host volume

Skip

These same new PV options are also available when defining migration and replication jobs under the Advanced options section.

Note that Velero support is unaffected by this change. CloudCasa for Velero continues to support all PV types supported by Velero itself.

Separate retention option for retained snapshots

If you choose the Backup to object storage option, select a backup method that uses snapshots, and select Preserve snapshots after backup you will now be given the option to enter a separate retention period for the retained snapshots. Previously, CloudCasa always used the policy retention period (or the manually entered retention period in the case of “run now” backups) for retained snapshots as well as for the copies on object storage. That wasn’t always ideal, since often you may want to retain snapshot recovery points for a much shorter period than backup (a.k.a. copy) recovery points. If no value is entered, the snapshot retention period will default to the policy retention period as before.

Kubelet pods directory parameter added to cluster advanced settings

To support the new Read data from underlying host volume backup method, a new Kubelet pods directory parameter has been added to the Add/Edit Cluster wizard Advanced options section. This allows you to set the base path where CloudCasa will look for pod PV data when “Read data from underlying host volume” has been selected for a PV backup operation on the cluster. The path from which pod PVC data will be read is based on this path, and is fully specified as: <kubelet-pods-directory>/<pod-id>/volumes/*/<pvc-id>/mount. If the parameter is not configured it defaults to “/var/lib/kubelet/pods”, which should be correct for most clusters.

Longhorn-specific backup option removed

The Copy from live Longhorn PVs advanced option has been removed from the backup definition wizard, since its functionality has been superseded by the new Configure backup method by storage class option. This option allowed users to force backups to read from live Longhorn PVs rather than using snapshots, but this can now be done by simply selecting “Read data from PVC” for Longhorn storage classes.

Existing jobs that have this option set will continue to behave as before, but new job definitions will need to use the new options.

Concurrency and memory usage controls

A new performance-related control, Total concurrent files, has been added under Advanced options to the job definition wizards for backup, restore, migration, and replication jobs. In the restore wizard, it replaces “Concurrent files per PV”. Total concurrent files sets the number of files that will be backed up or restored concurrently across ALL PVs.

The setting that was previously called Concurrent streams has been renamed to Concurrent PVs. The number of files processed in parallel per PV will equal ( Total concurrent files / Concurrent PVs ). The default is 24 for backups and 16 for restores.

The Data mover memory limit setting has been added to backup jobs. Previously it was only available for restore jobs. It controls the maximum amount of memory that the data mover pod will be permitted to allocate during the backup process. This can be set between 512 MB and 8 GB. Higher values may be required if large settings for PV and/or file parallelism are used. By default it is set to 1024 MB.

Copy backups of clusters with no PVs

Previously, when a backup to object storage (a.k.a. copy backup) was run on a cluster with no PVs included, it would fail with a “skipped” status as a warning to users that no PVs were being backed up. This was an explicit design choice. However, since copy backups became the default it has proven to be confusing to users. It was also incompatible with how replication and migration jobs are now defined. So we have updated the logic so that copy backups of clusters that don’t include PVs will now succeed as normal.

As always, when defining a new backup job you should verify that everything you want has been backed up as expected after the first run. This is especially true if you are using complex resource selection criteria.

Backup & Restore of EKS Fargate Profiles

A new Fargate Profile page under the “EKS options” section has been added to the EKS restore wizard to allow restore of Fargate profiles when creating EKS clusters. These profiles are now backed up automatically whenever a backup is run on a EKS cluster in a linked AWS cloud account. Users are presented with a list of all the Fargate profiles that were saved in the backup of the source cluster, and are also able to set the IAM role for pod execution and select subnets. Users also have the option to remove the Fargate profile configuration to indicate that it should not be created during restore.

These changes also apply to migration and replication jobs.

Associating OIDC providers during EKS restore

OIDC provider configurations are now backed up automatically whenever a backup is run on a EKS cluster in a linked AWS cloud account. During EKS restore, users are now presented with a new drop-down on the AWS Info page in the “EKS Options” section of the restore wizard where they can optionally associate OIDC providers, if desired. Users can select one or more OIDC providers to associate with EKS cluster when creating a new cluster on restore.

These changes also apply to migration and replication jobs.

Backup & restore of IAM roles for EKS

IAM roles and related policies will now be backed up automatically whenever a backup is run on a EKS cluster in a linked AWS cloud account. This includes IAM roles for cluster, node groups, addons, and load balancers. When creating restore definitions, users will now be able to select the option Create new IAM role from backup in the IAM role selection drop-down. CloudCasa will then restore the IAM role including all the inline and attached policies for the role. Note that the trust relationship policy for the role will also be re-created from the backup, but may require changes to the principal after restore to be valid. IAM role restore is available for “EKS Cluster IAM role” in the AWS Info page, for “Node IAM role” in the Node Pools configuration page, and “IAM Role” for individual cluster add-ons in the Cluster Addons page. It is also available for “Pod Execution Role” in the new Fargate Profile section.

These changes also apply to migration and replication jobs.

AWS CloudFormation stack update

Our CloudFormation stack template has been updated in this release in order to support the EKS backup, restore, and migration enhancements. You’ll need to apply the new version (0.1.9) to any previously configured AWS cloud accounts in order to take advantage of these features.

To do apply the update, go to Configuration/Cloud Accounts. There will be a yellow attention icon next to the names of cloud accounts that need to have their CloudFormation stacks updated. For each cloud account that needs an update: #. Select Actions/Edit #. Then mouse over the Update available icon at the top and click re-launch stack. #. Log in to your AWS account and follow the cloud formation stack update instructions.

As part of this update, new permissions were added to support the new features. See Permissions required for AWS account linking for the updated list of all permissions used.

Kubernetes agent updates

In this update we’ve again made several changes to our Kubernetes agent to add features, improve performance, and fix bugs. However, manual updates shouldn’t normally be necessary anymore because of the automatic agent update feature. If you have automatic updates disabled for any of your agents, you should update them manually as soon as possible.

Notes

With some browsers you may need to restart, hit Control-F5, and/or clear the cache to make sure you have the latest version of the CloudCasa web app when first logging in after the update. You can also try selectively removing cookies and site data for cloudcasa.io if you encounter any odd behavior.

As always, we want to hear your feedback on new features! You can contact us using the support chat feature, or by sending email to support@cloudcasa.io.